Industry Insights

AI Ethics: Lessons from the Frontlines of Accountability and Innovation

Chief Strategy Officer Brian Dawson unpacks the realities of AI ethics and offers practical guidance on how enterprises can ensure AI operates responsibly, builds trust with customers, and delivers value to the business.

Until now, AI ethics has mostly been a theoretical conversation. But as generative AI applications take on an ever-larger role in business processes at scale, missteps will carry real-world consequences. Generative AI has also expanded the scope of AI ethics from simple compliance to dynamic accountability and trust-building. As these systems make increasingly autonomous, context-sensitive decisions, businesses must address new challenges in bias, misinformation, and unintended consequences, ensuring AI operates responsibly while maintaining customer trust and transparency.

It’s happening now, in real time, as generative AI transforms how businesses interact with their customers. Is your business ready?

AI Running Amok

Mistakes made by AI can have far-reaching effects on your reputation, financial performance, and customer trust.

Take the case of Air Canada, whose chatbot told a customer he could apply for a bereavement fare refund after booking, contradicting the airline’s own bereavement policy. Air Canada argued that it wasn’t responsible for what the bot said; a Canadian tribunal rejected that argument and held the airline liable. But let’s be clear: customers don’t care whether a human or an AI made the decision. They care about the outcome. This incident set a precedent for AI accountability, forcing companies to rethink how much autonomy their systems should have.

Comcast illustrates the opposite risk. There, a third-party chatbot called Trim successfully obtained refunds for customers. Comcast didn’t deploy this bot; an external tool exploited gaps in its policies. The case shows how outside AI can force companies to tighten their internal procedures so they aren’t targeted by unauthorized automation.

Both examples show how AI can veer off course, intentionally or unintentionally, and how the line between ethical oversight and operational chaos is thinner than many businesses realize.

The Evolving Role of AI Security

For years, conversations about AI and ethics revolved around security: data breaches, encryption, and compliance. But today, security is no longer just about protecting information; it’s about safeguarding the trust of both customers and the company.

As generative AI and unsupervised learning take center stage, security risks are evolving. IBM’s 2024 Cost of a Data Breach report revealed growing concerns about generative AI being weaponized in cyberattacks. Imagine a rogue chatbot that doesn’t just malfunction but is deliberately hijacked to exploit customer data or spread misinformation, or models training other models to represent a company in ways that violate its own policies.

These attacks aren’t hypothetical; they’re happening. Hackers now target AI systems themselves, from training datasets to model outputs. Businesses must stop treating security as a compliance checkbox and start viewing it as an ongoing ethical imperative.

Humans are Accountable for AI Mistakes

The old question was, “What happens if the AI gets it wrong?” The new question is, “Who owns the mistake when the AI gets it wrong?”

Traditional accountability systems, which trace errors back to individual humans, break down in the world of AI. But make no mistake: until an AI agent holds the position of CEO, humans will own AI mistakes within companies, starting with a team or department and rolling up to the top.

I discussed this with a major airline customer shortly after the Air Canada incident. During our discussion, they remarked, “Some of our human agents get it wrong, too.” While that’s true, I reminded them of a critical difference—when a human agent makes a mistake, there is immediate recourse. You can retrain them, limit their access, or even replace them if necessary. With AI agents, however, decisions are made based on statistical probability, meaning an unavoidable percentage of outcomes will be wrong.

The challenge with AI agents isn’t just that they make mistakes; it’s that their mistakes feel different. AI continuously learns, adapts, and improves, but it still lacks human judgment in edge cases. When errors occur, organizations often shift blame internally, pointing fingers at those who built or deployed the AI. But customers don’t care whether the failure was caused by a human or an AI. They only experience the mistake, and in their eyes, the brand damage is done.

At the end of the day, AI’s ability to scale and automate is game-changing—but companies need to be prepared for the reality that AI will occasionally get it wrong. And when it does, the damage isn’t just technical—it’s reputational.

So what’s a responsible organization to do?

- Build Guardrails, Not Excuses

- Create Context-Aware Systems

- Establish Clear Accountability Frameworks

Guardrails, Not Excuses

Traditional guardrails often focus on limiting AI’s capabilities to avoid risk. At NLX, we advocate for prescribed flows, like Generative Journey, as a transformative approach to guardrails.

Prescribed flows involve defining specific, purpose-built workflows that guide AI systems within safe, effective boundaries. Rather than focusing solely on what AI shouldn’t do, prescribed flows emphasize what AI should do, ensuring consistent and reliable outcomes.

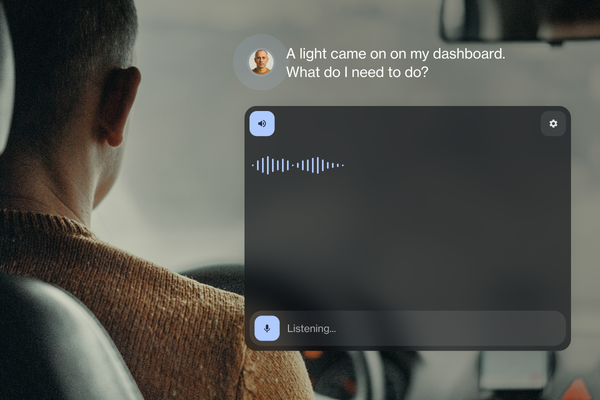

For example, Voice+ lets customers interact by voice and uses prescribed flows to guide those interactions, adapting dynamically to user input while staying within predefined parameters. This minimizes risk and strengthens customer trust by delivering predictable, high-quality experiences.
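To make the idea of a prescribed flow more concrete, here is a minimal, hypothetical sketch of one written as a small state machine in Python. It is not NLX’s Generative Journey API: the refund flow, the state names, and the `classify` function (a keyword-matching stand-in for a generative model) are all assumptions for illustration. What it shows is the core principle: the model may interpret free-form input, but only transitions the flow defines can ever execute, and anything off-script is handed to a human.

```python
from dataclasses import dataclass, field


# Hypothetical flow definition: each state lists the only intents it accepts
# and the state each intent leads to. Nothing outside this table can run.
@dataclass
class PrescribedFlow:
    transitions: dict[str, dict[str, str]]
    state: str = "start"
    history: list[str] = field(default_factory=list)

    def step(self, intent: str) -> str:
        """Advance only along a predefined transition; otherwise escalate."""
        allowed = self.transitions.get(self.state, {})
        if intent in allowed:
            self.state = allowed[intent]
        else:
            # Off-script request: hand off to a person instead of improvising.
            self.state = "escalate_to_human"
        self.history.append(self.state)
        return self.state


def classify(utterance: str) -> str:
    """Placeholder for a generative model that maps free text to an intent label.

    A real system would call an LLM here; this keyword match is only a stand-in.
    """
    text = utterance.lower()
    if "refund" in text:
        return "request_refund"
    if "yes" in text or "confirm" in text:
        return "confirm"
    return "unknown"


# Hypothetical refund flow: the AI can gather details, but submission
# always lands in a review state rather than an automatic payout.
REFUND_FLOW = {
    "start": {"request_refund": "check_eligibility"},
    "check_eligibility": {"confirm": "submit_for_review"},
}

flow = PrescribedFlow(transitions=REFUND_FLOW)
for message in ["I'd like a refund for my cancelled flight", "Yes, please confirm"]:
    print(flow.step(classify(message)))  # check_eligibility, then submit_for_review
```

Keeping the transition table explicit is what makes behavior predictable: adding a capability means adding a row to the table, not hoping the model infers the policy on its own.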

Combining prescribed flows with the power of Agentic AI turns guardrails into proactive tools that foster innovation, rather than reactive measures that merely prevent mistakes. This approach aligns with McKinsey’s findings that organizations focusing on specific AI use cases within particular domains, rather than spreading resources thin across many scenarios, achieve greater value and efficiency gains.

Context-Aware Systems

Generative AI has changed how systems interpret and respond to user needs, making context a critical aspect of ethical AI. A truly context-aware system doesn’t just process the immediate input; it considers broader circumstances such as user history, emotional state, and environmental factors. For example, when assisting a frustrated traveler after a flight cancellation, AI must provide empathetic, accurate solutions tailored to the urgency of the moment. At NLX, we’ve built these principles into our solutions through an experience-first approach: we design the conversation first, then treat channels purely as a means of delivery.

The ethical implications of context awareness are profound. These systems must prioritize accuracy to avoid misinformation, actively mitigate bias to ensure fairness, and balance automation with human oversight for sensitive scenarios. At NLX, we design our systems to prioritize empathy and flexibility, transforming customer interactions into meaningful engagements. By leveraging real-time data and advanced conversational AI, we ensure that every interaction feels personal, building trust and long-term loyalty. Most importantly, we let customers control how and when context-aware features are used.

Clear Accountability Frameworks

As AI systems become more autonomous, the need for clear accountability frameworks has never been greater. When an AI agent makes decisions—whether it’s processing refunds or resolving service issues—businesses must define who is responsible for its actions. Without accountability, mistakes can lead to customer frustration, regulatory scrutiny, or reputational damage, as seen in cases like Air Canada’s chatbot refund mishap.

At NLX, accountability is a cornerstone of our approach. All of our solutions, including Voice+, Generative Journey, knowledge bases (KBs), and Agentic AI, are designed for transparency and control, ensuring every action the AI takes can be traced and justified. By integrating human oversight at critical decision points and maintaining robust audit logs, we help businesses stay compliant while building trust. Establishing these frameworks isn’t just about managing risk; it’s about showing customers and regulators alike that your AI operates with integrity.
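As a rough illustration of what “traced and justified” can look like in practice, the Python sketch below pairs a human-approval checkpoint with an append-only audit log. Everything in it is hypothetical: the 200.00 approval threshold, the `process_refund` function, the record fields, and the `customer-care` owner are placeholders, not NLX features. The design choice worth noting is that every decision, automated or escalated, is recorded with a named accountable owner, so a mistake can be traced back to a team rather than to “the AI.”

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

# Hypothetical policy: refunds above this amount need human sign-off.
APPROVAL_THRESHOLD = 200.00


@dataclass
class AuditRecord:
    timestamp: str   # UTC time the decision was made
    action: str      # what happened, e.g. "refund:approved"
    amount: float
    route: str       # "auto" (AI decided) or "human_review" (escalated)
    owner: str       # team accountable for this action
    rationale: str   # why the action was taken, for later review


# Append-only in this sketch; a real system would use durable, tamper-evident storage.
audit_log: list[AuditRecord] = []


def process_refund(amount: float, rationale: str, owner: str = "customer-care") -> str:
    """Auto-approve small refunds, route large ones to a human, and log every decision."""
    needs_human = amount > APPROVAL_THRESHOLD
    status = "pending_human_approval" if needs_human else "approved"
    audit_log.append(
        AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            action=f"refund:{status}",
            amount=amount,
            route="human_review" if needs_human else "auto",
            owner=owner,
            rationale=rationale,
        )
    )
    return status


print(process_refund(45.00, "Duplicate charge on booking"))             # approved
print(process_refund(850.00, "Bereavement fare adjustment requested"))  # pending_human_approval
print(json.dumps([asdict(record) for record in audit_log], indent=2))
```

Where the threshold sits, and which actions always require a person, are business decisions; the sketch only shows that those decision points and their owners can be made explicit and reviewable.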

Ethical AI as an Unfair Advantage

The next wave of AI innovation will not just be about what these systems can do but also about how responsibly they can do it. Businesses must move beyond vague promises of security and compliance to actively design systems that foster trust, transparency, and accountability.

The Air Canada and Comcast stories aren’t just cautionary tales; they’re blueprints for what companies need to fix and how they need to think. AI ethics isn’t about stifling innovation—it’s about enabling it responsibly.

NLX was founded and is run by AI engineers. The people who created our platform, and who continue to evolve it, have built other systems from the ground up. We understand at the deepest level how AI systems need to work and how to make them ethical and reliable. We have earned our battle scars so our customers can deploy AI unscathed. And if you have battle scars of your own, NLX is a partner that can operate at your level and get it right the first time.

Companies that prioritize ethical AI will gain more than customer loyalty, operational efficiency, and market differentiation; they’ll be the last ones standing when others fall.