How NLX became a game changer for LLMs & custom NLPs

Learn why NLX's Dialog Studio stands out as a rare platform for enterprises investing in conversational AI models.

Natural language processing (NLP) is the branch of AI that epitomizes human ingenuity: we imbued computers with the ability to understand our language the way we do, so they could take on arduous tasks without the need for coffee breaks. Put simply, they’re superhuman interns. Put accurately, NLP systems interpret text or speech input from humans, an ability that has since expanded to translating languages and responding to a seemingly endless range of human commands. Conversational AI “chatbots” leverage NLP not only to understand what users say and identify the task they need done, but also to respond in natural, human-like language, so you’re not struggling to converse with something capable of nothing more than binary beep boops.

Extending from this field, large language models (LLMs) have become the next iteration of NLP, performing similar functions: they comprehend human input and respond conversationally. One critical difference, though, is that LLMs generate robust responses freely, predicting text rather than selecting from predefined answers. They, too, are superhuman interns, but with more autonomy in the workplace.

Off-the-shelf vs custom

It's been said that behind every good conversational AI builder is an NLP engine or LLM. This is true, as chatbots need one to operate. An NLP routes a user to one of any number of intents, each of which fulfills a business action appropriate to the user's need. With an NLP, a bot processes your input to “book an appointment” and matches you with an intent designed to do just that. LLMs can also respond with a business action, but they are generally less reliable at it than NLPs, since they may need to “guess” at the required response rather than follow prescribed logic. (They’re the intern who’s a bit green and takes a few risky chances, while their NLP counterpart is more prudent, having probably outgrown the position ages ago and ready for a promotion.)

In the case of NLPs, off-the-shelf varieties have been trained on general use cases, whereas custom NLPs decipher user inputs within a specific context and have been trained on domain-specific datasets. This allows for better sentiment analysis and intent matching for the user base they serve. We know words carry positive or negative sentiments depending on the context (e.g., “finish”) or depending on the industry (e.g., “abduction” in healthcare vs. “abduction” in law enforcement). Other factors, such as a person’s region, device, and communication channel, also affect which words people use, and how they use them, when telling a bot what they want.

Why does any of this matter? Because these factors drove many enterprises to develop their own NLP. Or adopt an LLM. Or both.

But that presents a challenge.

The need for an agnostic platform

Discovering a conversational AI platform capable of integrating with a custom NLP was nearly impossible several years ago. More recently, your odds of finding one that could also support an LLM were worse than your odds of discovering the lost city of Atlantis under your driver's seat. This scarcity meant that tremendous investments in data, algorithms, and semantic models were at risk, limited by what existing conversational AI platforms could handle, until NLX launched its conversational AI platform, Dialog Studio, in 2018. It was among the first to provide the capabilities required by companies investing millions in AI models.

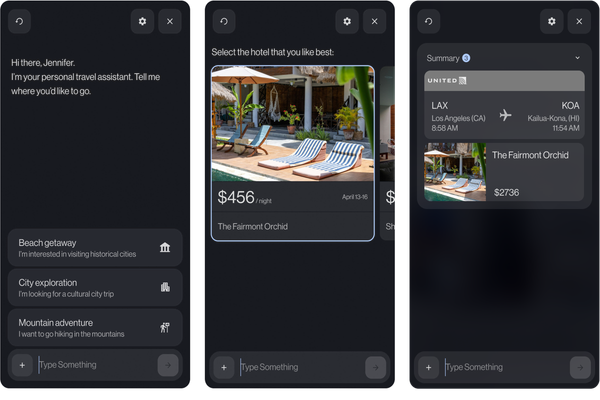

With a fully customizable environment, Dialog Studio remains one of the few conversational AI platforms that lets you choose between an off-the-shelf NLP (e.g., Amazon Lex, Google Dialogflow) and a custom one, and that also supports adopting an LLM. Remaining true to the NLX vision established years ago, Dialog Studio has an agnostic design, providing multiple endpoints for various integrations, including your own NLP engine or chosen LLM. This approach accommodates client preferences that vary by type of business, cloud vendor, security requirements, and more.

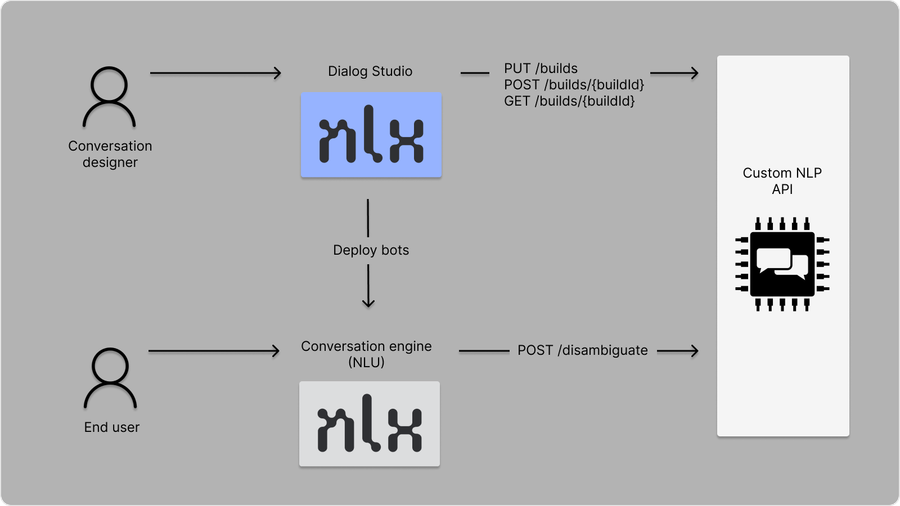

Dialog Studio's architecture

A modular architecture means Dialog Studio can interface with any NLP, custom or otherwise, and can extend support for LLM implementation in mere minutes. Using the provided specs and available settings, integrating a custom NLP, for example, takes as few steps as integrating any off-the-shelf provider. Our Dialog Studio implementation ensures your custom model interfaces correctly for basic runtime operations, utterance disambiguation, and the packaging of your intents into each bot build.
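The general idea behind an NLP-agnostic platform can be illustrated with an adapter pattern: every backend implements the same small contract, and the platform's routing and disambiguation logic never needs to know which backend is plugged in. This is a minimal sketch, not Dialog Studio's actual interface; the `NLPProvider` protocol, the `classify` method, both provider classes, and the threshold and margin values are all hypothetical.

```python
from typing import Protocol

class NLPProvider(Protocol):
    """Hypothetical contract that every NLP backend implements."""
    def classify(self, utterance: str) -> list[tuple[str, float]]:
        """Return (intent, confidence) pairs, highest confidence first."""
        ...

class KeywordNLP:
    """Stand-in for a custom in-house model: scores intents by keyword hits."""
    def __init__(self, keywords: dict[str, set[str]]):
        self.keywords = keywords

    def classify(self, utterance: str) -> list[tuple[str, float]]:
        words = set(utterance.lower().split())
        scores = [
            (intent, len(words & kws) / len(kws))
            for intent, kws in self.keywords.items()
        ]
        return sorted(scores, key=lambda pair: pair[1], reverse=True)

class FixedNLP:
    """Stand-in for any other backend (e.g. a wrapped third-party service)."""
    def __init__(self, result: list[tuple[str, float]]):
        self.result = result

    def classify(self, utterance: str) -> list[tuple[str, float]]:
        return self.result

def route(provider: NLPProvider, utterance: str,
          threshold: float = 0.5, margin: float = 0.1) -> str:
    """Platform-side logic: identical no matter which provider is plugged in."""
    ranked = provider.classify(utterance)
    if not ranked or ranked[0][1] < threshold:
        return "fallback"
    if len(ranked) > 1 and ranked[0][1] - ranked[1][1] < margin:
        # The top two intents are too close to call: ask the user to clarify.
        return f"disambiguate:{ranked[0][0]}|{ranked[1][0]}"
    return ranked[0][0]

custom = KeywordNLP({
    "BookAppointment": {"book", "appointment"},
    "CancelAppointment": {"cancel", "appointment"},
})
print(route(custom, "book an appointment"))
print(route(custom, "appointment"))
```

Swapping `custom` for a `FixedNLP` (or any other class with a matching `classify` method) requires no change to `route`, which is the property that makes a custom model as easy to integrate as an off-the-shelf one.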

To learn more and indulge in possibility over capability with NLX’s Dialog Studio, please book a call with us.