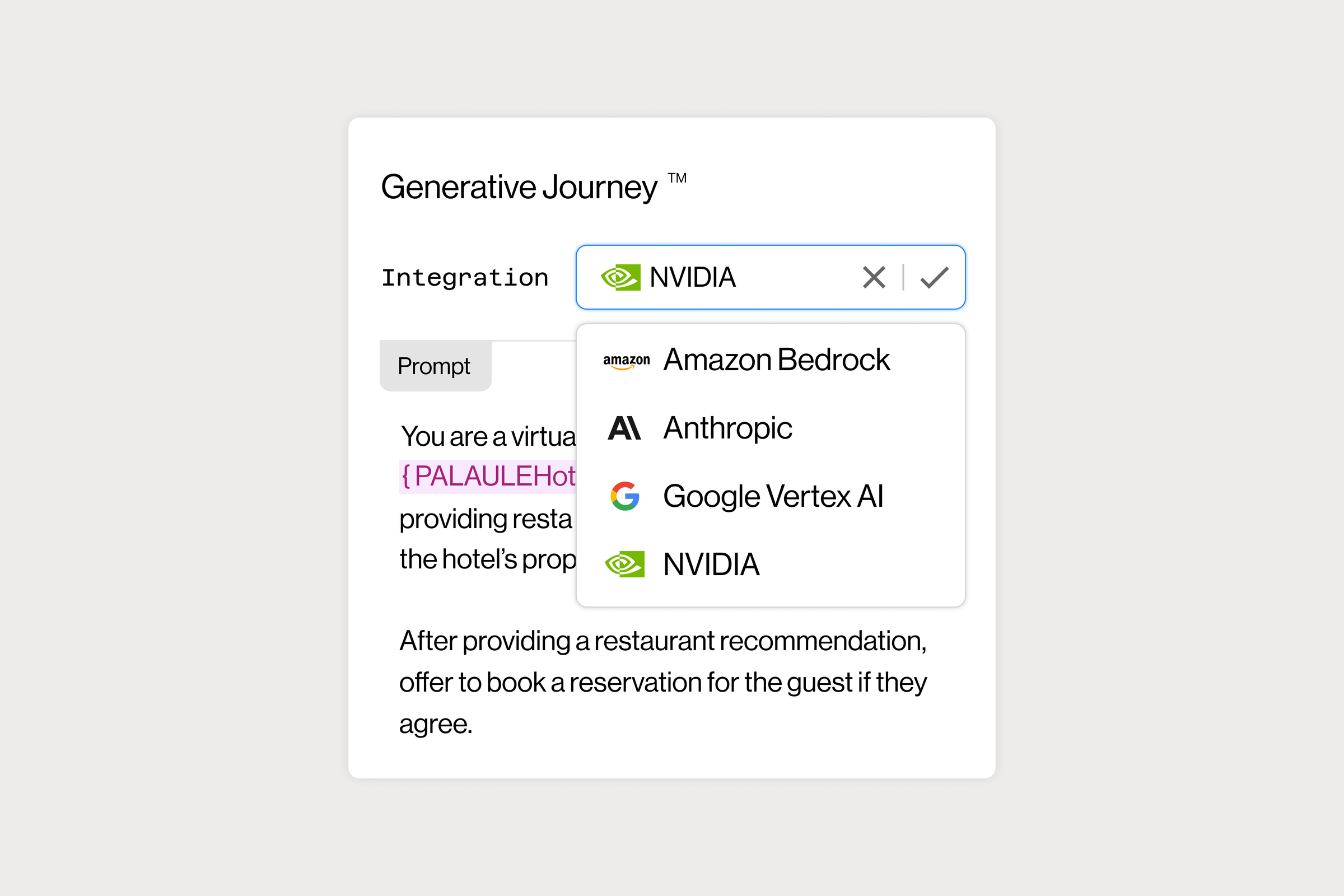

NLX adds NVIDIA NIM to its suite of industry-leading Large Language Model (LLM) integrations

Uniquely, NLX enterprise customers can switch between different LLMs in real time and compare them for specific use cases using a simple dropdown menu.

NLX was designed from day one to let customers not only choose which technologies to use but also compare and move between them with ease. Because of the NLX platform design and the integrations we maintain on our end, we remove decision-making friction for our customers: every choice is a two-way door. You can experiment and change your mind without incurring additional engineering costs, all while learning and optimizing based on what's most effective.

Adding NVIDIA NIM™ creates even more flexibility and control for NLX customers in two ways.

First, NVIDIA NIM provides a streamlined path for developing AI-powered enterprise applications and deploying AI models in production. This means NLX customers can deploy workloads into their own cloud environment, giving them more control over cost, performance, and data.

Second, NVIDIA NIM is integrated with several open-source LLMs, including Llama 3.1, models from Mistral AI, and many others that have distinct advantages for specific use cases. Customers have full control over not only which models they use, but also when model updates are applied to their environment, which is not the case with other providers.

From the NLX perspective, we’re completely agnostic about which technologies customers choose. Our goal is to offer all the options and, more importantly, make it trivially easy to find the best solution for any conceivable use case and budget.

NLX has integrated with nearly every major large language model and AI technology provider on the market. Earlier this year, we added integrations with OpenAI’s GPT-4o and Google’s Gemini 1.5 Pro within a day of both models being announced.

Learn more about the NLX platform or contact us for a deeper dive into how NLX can help create next-generation conversational experiences for your customers.