
It’s Not Just Direct-to-Consumer AI Companies that Need Guardrails for Users Experiencing Mental Health Issues

Consumers engage with customer service bots in similar ways.

As human interaction with chatbots, AI assistants, and AI agents becomes ubiquitous, companies must dedicate engineering resources to building guardrails for people experiencing mental health issues.

The most recent example is the teenager who died by suicide after developing a relationship with a chatbot that encouraged him to harm himself. But it’s not just children who are vulnerable; adults have also taken their own lives and committed violence on the advice of AI assistants. And for every incident that makes the news, many more people are likely suffering psychological harm from these relationships.

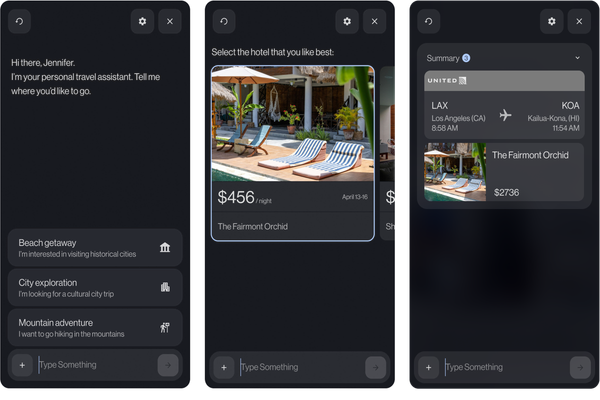

What might be surprising is that it’s not only direct-to-consumer AI companies that need these guardrails. We know from experience that people share highly personal information, including emotional problems and thoughts of violence and self-harm, with all kinds of AI, from customer support assistants to entertainment-focused companions like those from Character.AI.

What should be done?

Tragically, it took a high-profile lawsuit against the companies involved in the recent suicide to spur consumer generative AI companies to deploy much more aggressive technical guardrails.

To ensure these investments continue, NLX supports state and federal legislation that requires certain guardrails, monitors compliance, and holds companies responsible if they fall short.

Although they are not the focus of today’s debate, companies whose AI assistants handle customer engagement or service must recognize that they need guardrails as well.

Founders, CEOs, chief technology officers, and product leaders must make it one of their company’s core operating principles to invest heavily in technology that prevents harmful content from being posted, trains models not to respond unsafely, monitors and flags failures, and sends messages offering help and support when needed.

This may sound obvious, but it’s easy to underestimate the pressure on companies to drive growth and performance. That pressure is by no means an excuse, but it does explain why we so often see action only after something terrible has happened.

Board members and investors can do their part by supporting leadership in doing the right thing. In the short term, postponing a growth-driving innovation might seem like a sacrifice, but over time, the companies that win will be those not crippled by the distraction and financial hardship of lawsuits.

Companies that use chatbots, AI assistants, and AI agents can avoid the mistakes of social media platforms and make safety their top priority now. For its part, NLX built its platform from day one to make it easy for customers to ensure their conversational applications do not respond to users inappropriately and do flag instances where users are submitting content about self-harm, violence, or other concerning topics.

If you would like a no-pressure, no-strings-attached discussion about safety in conversational applications, reach out here.