
Don't Let Your AI Stutter

When it comes to generative AI, we’ve spent the last two years obsessing over the brain: the reasoning, the logic, and the depth of the system. But we’ve largely ignored the reflexes.

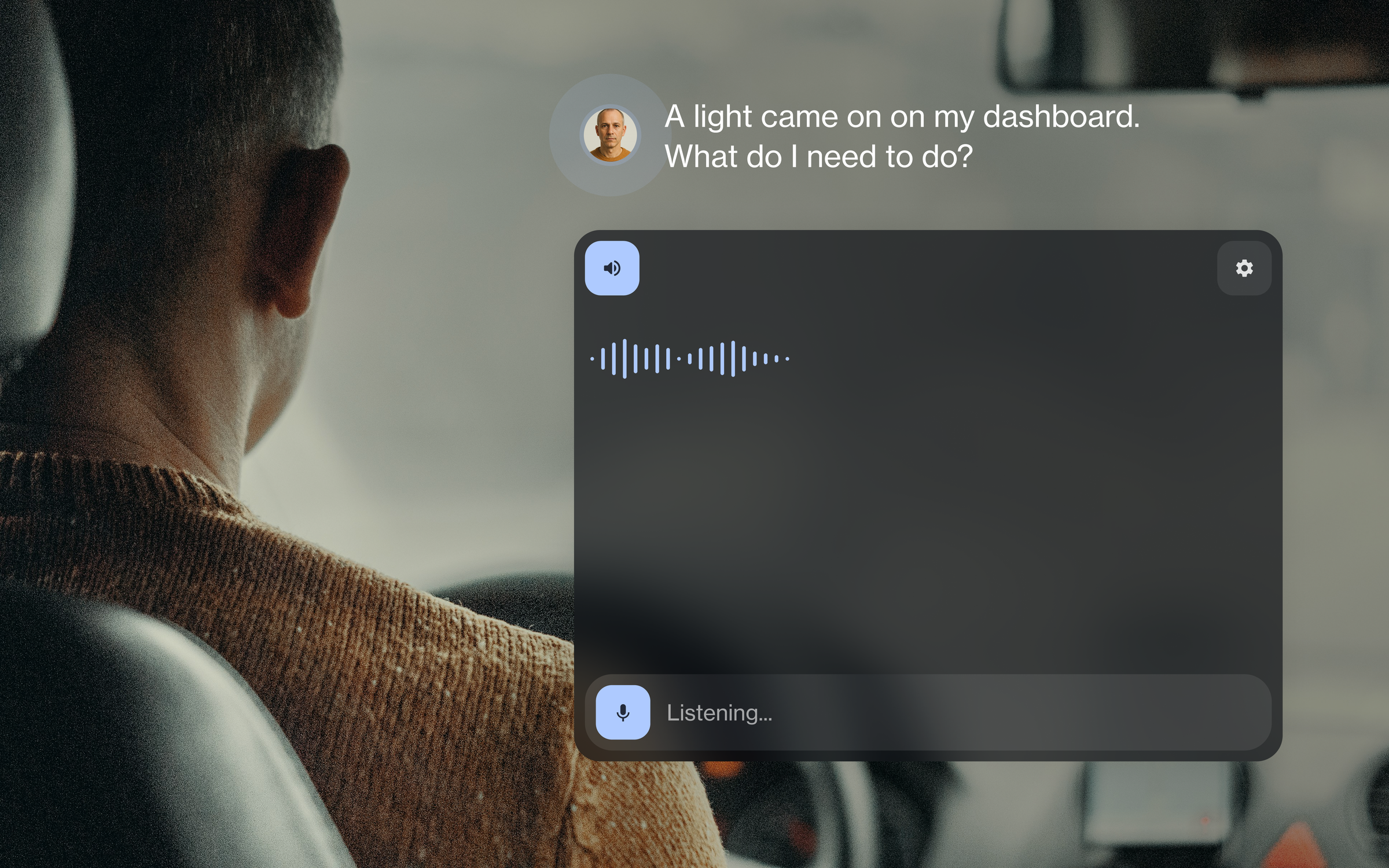

Intelligence is meaningless without agility. While a sophisticated answer is the goal, the reality of shipping AI is often defined by the stutter, that awkward multi-second void where the system sits in silence while the model processes an intent. In a live conversation, especially in voice, that silence isn't just a technical lag; it’s a brand statement. Every second of dead air is a moment where your customer loses confidence, stops believing in the system, and starts looking for the exit.

In other words, latency is the new wrong answer. A perfect response delivered three seconds too late can become functionally useless. The moment a user has to ask, "Are you still there?", your automation has failed its most basic task: maintaining the illusion of a seamless interaction. In the eyes of a customer, silence communicates a system error, and customers don't wait around for errors to resolve themselves.

At NLX, we provide the orchestration layer that turns raw AI reasoning into a high-performance conversational tool. We don't just help you find the right answers; we give you the controls to deliver them at the speed of thought.

Why latency is the silent conversion killer

Latency in conversational AI is the gap between a user finishing their sentence and the system delivering a meaningful response. It accumulates at every stage of the pipeline: the time Speech-to-Text (STT) takes to transcribe the user, the LLM’s thinking time, and the time it takes to synthesize the voice output.

When latency spikes:

- User flow breaks: In natural conversation, humans expect a gap of about 200ms between turns. Anything over a second feels like work.

- Confidence drops: Dead air creates anxiety. Users wonder, “Did it hear me? Is it broken? Is it even working?”

- Costs rise: High latency is often a symptom of over-engineered, heavy-handed LLM usage that drains your budget without adding value.

The reality is you can’t fully solve latency. Physics, API hops, and model weights are stubborn things. But while you can’t always remove it, you can control and counter it.

Controlling the uncontrollable: the NLX orchestration layer

NLX provides a strategic orchestration layer that empowers your team to navigate the inherent delays of generative AI.

We treat latency as a manageable variable, giving you the specific tools to fine-tune your conversation’s pace:

- Optimized native voice channels: Our native voice channel is built from the ground up for low-latency performance. By using optimized transcription and synthesis models under the hood, we shave off the milliseconds that generic integrations lose. We also support streaming and interim messaging, so the system can start communicating before the full response has finished processing.

- Selective logic: Speed meets strategy here. When building a workflow for your conversational AI, NLX gives you the power to choose between deterministic logic (for instant rule-based responses) and generative reasoning steps. Our transform step also allows you to adjust or validate data before it ever impacts routing or modality rendering. This prevents the LLM from wasting time trying to clean messy input that a simple rule could have fixed instantly.

- Smart caching and just-in-time APIs: Why ask the same question twice? NLX allows builders to assign tools and call APIs only when necessary. By caching information within the same conversation session, we reduce redundant data fetching and LLM reasoning, so the system retains what it already knows instead of re-processing it.

- Rule-based (non-LLM) guardrails: While safety is paramount, it shouldn't be a bottleneck. NLX allows you to program guardrails with hardcoded rules and logic that don't rely on expensive LLM reasoning. By checking for PII, profanity, or SKU formats using regex or keywords, you maintain a safety net without adding a single millisecond of model delay.
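To make the rule-based idea concrete, here is a minimal Python sketch of non-LLM guardrails. It is not NLX's guardrail configuration: the regex patterns, the assumed SKU format (three letters, a hyphen, five digits), and the keyword list are hypothetical placeholders. Every check is a plain regular expression or set lookup, so none of them waits on a model.

```python
import re

# Hypothetical rules for illustration only; production PII detection needs
# broader, locale-aware patterns. None of this is NLX's built-in configuration.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # US SSN shape: 123-45-6789
SKU_RE = re.compile(r"[A-Z]{3}-\d{5}")         # assumed SKU format: ABC-12345
BLOCKED_WORDS = {"darn", "heck"}               # placeholder keyword list


def redact_pii(text: str) -> str:
    """Mask email addresses and SSN-shaped numbers with fixed tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return SSN_RE.sub("[SSN]", text)


def contains_blocked_word(text: str) -> bool:
    """Case-insensitive whole-word keyword check (no model call)."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKED_WORDS for word in words)


def is_valid_sku(value: str) -> bool:
    """True if the whole (trimmed, upper-cased) value matches the SKU format."""
    return SKU_RE.fullmatch(value.strip().upper()) is not None


if __name__ == "__main__":
    print(redact_pii("Reach me at jane.doe@example.com or 123-45-6789"))
    print(contains_blocked_word("Well, HECK."), is_valid_sku(" abc-12345 "))
```

Because the keyword check works on whole words, "Check this" does not trip the "heck" rule, which keeps false positives down without asking a model to judge intent.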

Pro tips: how to win the race against the clock

Tip 1: Use interim messaging: On our native voice channel, keep the user engaged with real-time updates while the heavier processing happens in the background.

Tip 2: Offload the routine: If your AI is asking for a ZIP code, use a deterministic node. Don't waste an LLM's brainpower on a simple form field.

Tip 3: Cache your session data: Use NLX session variables to store API results so that subsequent turns don't require a fresh round-trip to your database.

Tip 4: Design for clarification: It’s faster to ask "Did you say Order 426?" via a quick rule-based check than to wait for an LLM to hallucinate a guess based on bad speech-to-text transcription.
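Tip 1 can be illustrated with a generic asyncio pattern: start the slow step, and if it hasn't returned within a short threshold, speak a filler line first. This is a sketch of the idea, not the NLX voice channel API; `respond_with_interim`, `fake_llm`, the 0.8-second threshold, and the filler text are all hypothetical.

```python
import asyncio
from typing import Awaitable, Callable, List


async def respond_with_interim(
    slow_step: Awaitable[str],
    say: Callable[[str], None],
    filler: str = "One moment while I look that up.",
    threshold_s: float = 0.8,
) -> str:
    """Await slow_step; if it is still running after threshold_s, say a filler line first."""
    task = asyncio.ensure_future(slow_step)
    done, _pending = await asyncio.wait({task}, timeout=threshold_s)
    if not done:
        say(filler)  # fill the silence instead of leaving dead air
    answer = await task  # the real response still arrives as soon as it is ready
    say(answer)
    return answer


async def fake_llm(delay_s: float, text: str) -> str:
    """Hypothetical stand-in for a slow LLM or API call."""
    await asyncio.sleep(delay_s)
    return text


async def demo(delay_s: float, threshold_s: float) -> List[str]:
    """Collect what would be spoken, in order."""
    spoken: List[str] = []
    await respond_with_interim(
        fake_llm(delay_s, "Your order ships Friday."), spoken.append, threshold_s=threshold_s
    )
    return spoken


if __name__ == "__main__":
    print(asyncio.run(demo(delay_s=1.2, threshold_s=0.8)))
```

The filler only plays when the slow step overruns the threshold, so fast turns stay clean and slow turns never go silent.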

Common questions on AI latency

What is the ideal response time for conversational AI? For a natural feel, systems should aim to start responding (measured as "Time to First Token," or TTFT) within 500ms to 1 second. Beyond 2 seconds, user satisfaction scores typically begin to drop.

Can you eliminate LLM latency entirely? No. Large models require significant compute time. However, you can mask latency using conversation design (like "filler" phrases) or bypass it by using deterministic nodes for routine tasks.

What is 'deterministic vs. generative' logic? Deterministic logic uses pre-set rules (if/then) to provide instant responses. Generative logic uses LLMs to create a response. Using a hybrid approach, which NLX supports, allows you to choose speed for simple tasks and intelligence for complex ones.
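A hybrid approach can be as simple as trying a deterministic rule first and calling a model only when the rule fails. The sketch below applies this to the ZIP code example from Tip 2; `handle_zip_turn` and `llm_fallback` are hypothetical names (the latter stands in for whatever generative step you use), and the pattern only covers US ZIP and ZIP+4 formats.

```python
import re
from typing import Callable, Optional, Tuple

ZIP_RE = re.compile(r"\b(\d{5})(?:-\d{4})?\b")  # US ZIP or ZIP+4


def extract_zip(utterance: str) -> Optional[str]:
    """Deterministic step: pull a 5-digit ZIP out of the transcript, if present."""
    match = ZIP_RE.search(utterance)
    return match.group(1) if match else None


def handle_zip_turn(
    utterance: str, llm_fallback: Callable[[str], Optional[str]]
) -> Tuple[Optional[str], str]:
    """Return (zip_code, path), where path says which branch produced it."""
    zip_code = extract_zip(utterance)
    if zip_code is not None:
        return zip_code, "deterministic"  # instant, no model call
    # Only pay for model latency when the rule can't resolve the input,
    # e.g. a spelled-out transcript like "nine oh two one oh".
    return llm_fallback(utterance), "generative"
```

In a real flow, the fallback's output should still pass the same validation, and possibly a quick confirmation as in Tip 4, before it is trusted.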

The bottom line

You shouldn't have to choose between a smart AI and a fast one. With NLX, you have the opportunity to be strategic. You have the tools to swap, clean up, and control your AI’s performance in real time.

Ready to see where your conversation is breaking down?

Experience the power of NLX Analytics and our low-latency toolset today. Book a demo.