Industry Insights

Stop Shipping Raw AI

Generative AI didn’t break the internet because it was brilliant; it broke the internet because it was weaponizable.

The early headlines weren’t “LLMs are amazing.” They were about prompt injection, jailbreaks, hallucinated legal advice, confidently wrong answers, and systems leaking instructions they were never supposed to reveal. In other words, LLMs were taking orders.

In production, every user message is an untrusted input, and every generated sentence is invariably associated with your business. So every conversation turn becomes a fresh opportunity for your AI to be manipulated or simply make something up with total confidence, and that has lasting consequences.

That’s why guardrails now outrank prompt engineering. Prompts shape behavior, but guardrails enforce it. And as teams swap models, upgrade versions, or experiment across providers, guardrails keep policies consistent even when the underlying model changes.

NLX guardrails are the safety and governance layer that can sit between:

- what users say

- what your AI interprets

- what your system generates

They automatically evaluate conversational behavior on every exchange between AI and user, ensuring the conversation doesn’t involve anything unpermitted from either side.

Unlike prompt tweaks or hardcoded logic, NLX guardrails operate at runtime and are independent of workflow logic, AI engines, or LLM prompts. They exist as an always-on control system.

Control is more than detection

Spotting a problem is only the first step. What matters after that is how your system responds.

With NLX guardrails, enforcement is flexible and intentional, as you can override responses with safer alternatives, redact info in logs and conversation transcripts, redirect the conversation path, or even simply log and let things pass.

That flexibility helps teams balance safety and business outcomes without rebuilding flows or rewriting prompts or, more importantly, sacrificing the user experience.

Detection can’t rely solely on rules

We also know not all failures are obvious. That’s why NLX supports multiple detection strategies, because real-world risk isn’t one-dimensional. With NLX guardrails, you can check using:

- Regex: For precise, structured patterns (credit cards, IDs, profanity)

- Keyword: For simple inclusion checks

- LLM-based judgment: For contextual, semantic evaluation

That last one is where guardrails move beyond basic safety, because LLM-powered guardrails can reason about intent and meaning. For example, it can consider questions like: “Is the user trying to manipulate the system?” “Does this response expose private information?” and so on. Instead of hardcoding fragile logic, you can give your AI a rulebook and let it judge.

-900x816.png)

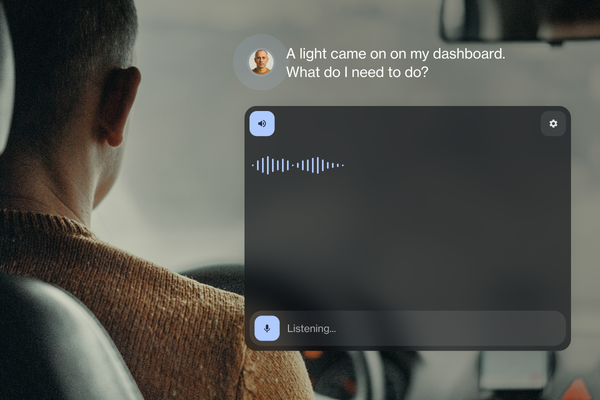

A unique problem no one talks about

Most discussions about AI safety fixate on LLM hallucinations and dangerous prompt injection. Before a user message ever touches an NLP engine or LLM, input guardrails step in. They help with the obvious risks like prompt injection attempts, sensitive data exposure, and disallowed or malicious inputs.

But they also solve a subtler, often overlooked problem, especially in voice experiences. Voice AI introduces a layer of uncertainty that text never had.

For instance, speech-to-text (STT) models sometimes mishear names, product SKUs, or short ambiguous utterances. A user might say “cancel order four two six” and the system hears “cancel order forty-six.” Or “transfer to claims” becomes “transfer to planes.”

Traditionally, this was out of your control. But with input guardrail rules, your application can evaluate interpreted user input before it drives logic or actions. That means they can:

- detect likely transcription errors

- validate critical values (order numbers, dates, amounts)

- flag or correct mismatches before automation proceeds

- reroute the conversation for clarification instead of failure

In voice systems, guardrails become a second line of defense, ensuring that what the AI thinks it hears is reasonable, safe, and accurate before acting on it. This is one of the most under appreciated benefits of guardrails and among the most powerful.

Ultimately, teams want guardrails, but they don’t want endless prompt tuning and branching logic, custom middleware for every model, or safety layers that slow things down. Try experiencing the power of NLX guardrails.

Try it out today.