The Market Finally Acknowledges the Often Messy Operational Reality of Enterprise AI

You might have noticed a shift in the narrative coming out of Silicon Valley. For the past two years, the promise was absolute: Give us a year, and autonomous agents will handle your shopping, book your travel, and run your business.

But as we enter 2026, the reality is setting in.

Recent reporting from The Information highlights that OpenAI's ambitions for agentic commerce have hit a wall. Why? Not because the models aren't smart enough. They are brilliant. They hit a wall because of the messy data reality of the real world.

At the same time, TechCrunch reports that OpenAI is aggressively pivoting to capture enterprise dollars this year.

I have spent my career building AI systems at scale, first at American Express and now at NLX, so to me this is validation. We are finally moving past the "magic button" phase of AI and entering the phase of operational reality, and the market is finding that it's a lot easier said than done.

The smartest model in the world is useless if it cannot navigate the chaotic, fragmented, and regulated infrastructure of a modern enterprise.

The last mile is a marathon

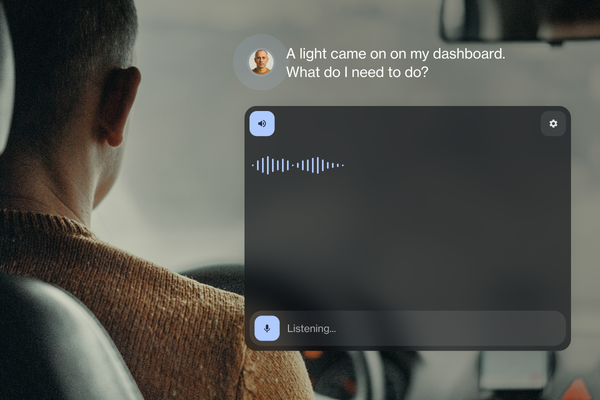

The struggles reported around OpenAI’s shopping agents illustrate a problem we solve every day at NLX. The premise was that an AI agent could simply browse the web and buy things for you.

But in an enterprise, data doesn’t live on a neat, publicly indexable webpage.

In retail, real-time inventory is buried three layers deep in a legacy ERP system.

In travel, flight availability is locked behind complex PSS (Passenger Service System) gateways.

In banking, transaction data is siloed in mainframes that haven't been updated since the 1990s.

When Big Tech companies promise that their AI will "just work," they underestimate the sheer complexity of connecting a probabilistic LLM to these deterministic, rigid business systems. I called this out a year ago, as the frenzy around agents reached a fever pitch. Now they are finding out that you can't scrape your way to a transaction.

Why smarter doesn't mean safer

2025 was supposed to be the "Year of the Agent." It stumbled because the reliability wasn't there. For a consumer using ChatGPT to write a poem, a 90% success rate is a miracle. For an airline using AI to rebook a stranded family during a snowstorm, a 90% success rate is a PR disaster.

This is the disconnect. The giants are building for capability: models that reason better. But enterprises need reliability: systems that do exactly what they are told, every single time, without hallucinating a refund policy that doesn't exist.

The enterprise needs orchestration, not just intelligence

Buying access to a powerful model is not the same as buying a solution. To make AI work at the scale of an airline or a global retailer, you need:

- Structured Orchestration: To ensure the AI follows your business rules, not just its training data.

- Integration Layers: To bridge the gap between modern APIs and 20-year-old databases.

- Guardrails: To stop the AI from making promises your legal team can’t keep.

The path forward

I remain incredibly bullish on the future of this technology. The capabilities of the underlying models are staggering. But the lesson from the recent stalling of agentic products is clear: you cannot bypass the complexity of the enterprise.

You have to build through it.

At NLX, we don't promise magic. We promise a platform that understands the nuance of your operations. We don't try to replace your messy data reality; we give you the tools to master it.

So, as the hype cycle cools and the hard work of implementation begins, remember that the companies that win with AI won't be the ones waiting for a super-agent to solve everything. They will be the ones building the infrastructure to make today's AI actually work.